The successful production of the world's first semiconductor chip designed with ChatGPT demonstrates the huge potential of AI. A few months ago, researchers at New York University achieved a breakthrough by using ChatGPT to design and develop a CPU without having to write in a hardware description language (HDL) themselves.

The research team was led by Dr. Hammond Pearce of New York University.

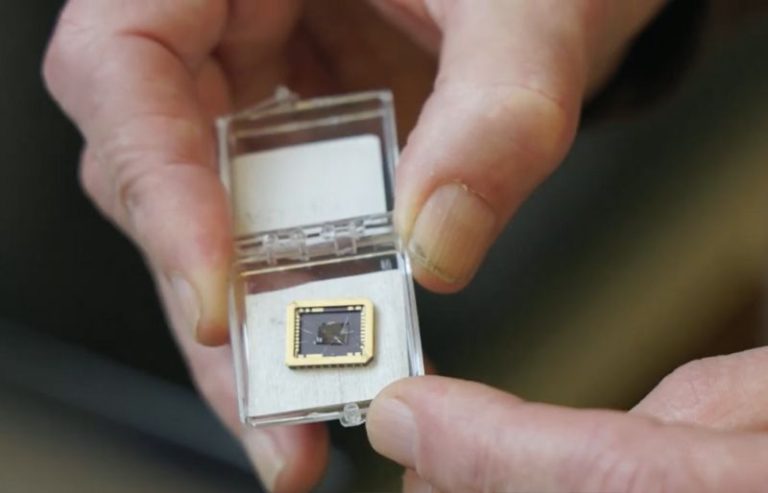

All they used was plain English, with definitions and examples describing the functions of a semiconductor processor, from which ChatGPT produced the design instructions for this chip. The chip is not a complete processor like those from Intel or AMD; it is a component of a CPU, a logic chip within the architecture of a new 8-bit microprocessor.
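As a rough illustration of that workflow, the sketch below assembles a plain-English description and a few behavioral examples into a single prompt for a language model. Everything in it is hypothetical: the `ModuleSpec` structure, the `build_prompt` helper, the shift-register example, and the prompt wording are assumptions made for illustration, not the prompts the NYU team actually used, and no model is called.

```python
from dataclasses import dataclass, field


@dataclass
class ModuleSpec:
    """Plain-English description of one hardware block (hypothetical structure)."""
    name: str
    description: str
    examples: list[str] = field(default_factory=list)


def build_prompt(spec: ModuleSpec) -> str:
    """Turn a plain-English spec into one prompt string (assumed wording)."""
    lines = [
        f"Design a hardware module named '{spec.name}'.",
        f"Function: {spec.description}",
    ]
    if spec.examples:
        lines.append("Examples of expected behavior:")
        lines.extend(f"- {example}" for example in spec.examples)
    # Assumption: the model is asked to answer in Verilog.
    lines.append("Respond with Verilog source code only.")
    return "\n".join(lines)


# Hypothetical example block; not taken from the actual chip design.
spec = ModuleSpec(
    name="shift_register",
    description="An 8-bit shift register that shifts in one bit per clock cycle.",
    examples=["After reset, all 8 bits are 0."],
)
prompt = build_prompt(spec)
print(prompt)
```

The resulting string would then be sent to the model, and the returned HDL would still need to be simulated and reviewed by a person before fabrication.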

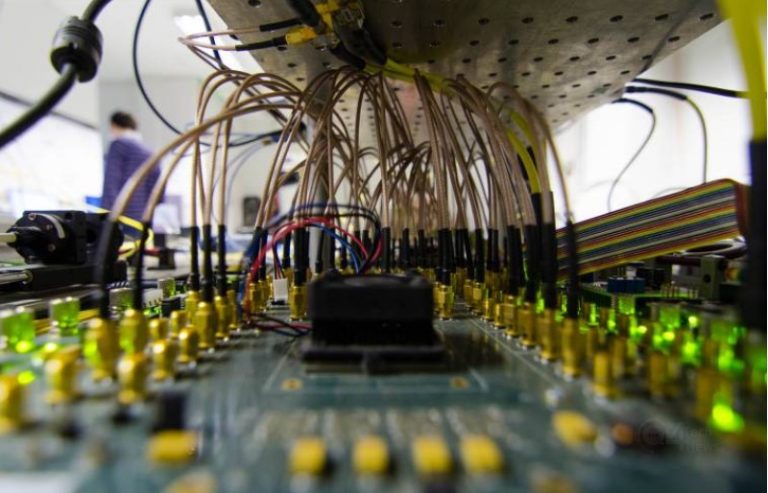

In the clip, the Core C1 chip is connected to another circuit board, which is linked to a screen, and is used to operate several decorative lights on a Christmas tree. This demonstrates that ChatGPT can be used to design and develop semiconductors without the designer having to be highly proficient in a hardware description language (HDL) such as Verilog.

Similarly, researchers at Georgia Tech have used ChatGPT to design computer circuits more quickly and efficiently. Their process involves giving ChatGPT specific instructions and having it create designs based on existing source code.

However, the model is still limited in how well it understands hardware concepts and how creatively it can program. The team is trying to train the model more deeply for IC design.

The model has difficulty connecting hardware concepts to actual source code because it lacks specific training in IC design. By using language models to document source code, researchers can improve the training process.

This not only reduces costs but also shortens development time and increases productivity in semiconductor chip development. The ultimate goal is to train ChatGPT to reason about and explore the design space in order to create complete ICs, although actual implementation still faces many limitations.